Symbolica is a symbolic manipulation toolkit and a computer algebra system. In this first blog post I will try to explain what these terms mean, what the use is of symbolic manipulation and what Symbolica will contribute to the ecosystem.

Computer algebra

Almost anyone has used a computer to do mathematics, be it as simple as doing arithmetic on a calculator app. Already more advanced usage is calling geometric functions such as the sine and cosine with a number and getting a number out.

sin(0.1) = 0.09983341664682815To perform this computation the computer needs some understanding1 of what the sine function is and how it can be evaluated for any input value. For small arguments2, one could for example build in the Taylor series of the function:

1 or a hardcoded table and interpolation

2 using symmetries the argument can always be made small

\[ \begin{align*} \sin(x) &= x - \frac{x^3}{3!} + \frac{x^5}{5!} - \frac{x^7}{7!} + \ldots\\ \sin(0.1) &= 0.09983341664682541 \end{align*} \]

Even more knowledge is required to evaluate the sine exactly for input that is irrational:

\[ \sin(\pi / 4) = \frac{\sqrt{2}}{2} \]

First, the irrational number \(\pi\) cannot be represented by any number in hardware, and therefore must be represented by some more complicated data type (the same goes for \(\sqrt{2}\)).

A computer program that ‘understands’ mathematics is called a computer algebra system. You may have already used some, such as sympy, Mathematica, Maple, FORM, Sage, Matlab.

Symbolic manipulation

Some computer algebra systems only handle numerical input and make extensive use of linear algebra. Symbolica on the other hand works with mathematical expressions that contain symbols and rational numbers (no floating point numbers). For example: \[ f(x,g(y^{-4}, x y + 4))^5 \frac{123123}{192783821} \] is an expression that Symbolica understand and can manipulate.

The most important manipulation is matching a pattern and making replacements (see Section 5), just like Regex does for text3.

3 See an example regex here.

What if the expressions become huge?

Most symbolic manipulation toolkits can handle small expressions that easily fit in memory. But what happens when a computation requires expressions that go well beyond memory? Does such a case even happen? Absolutely!

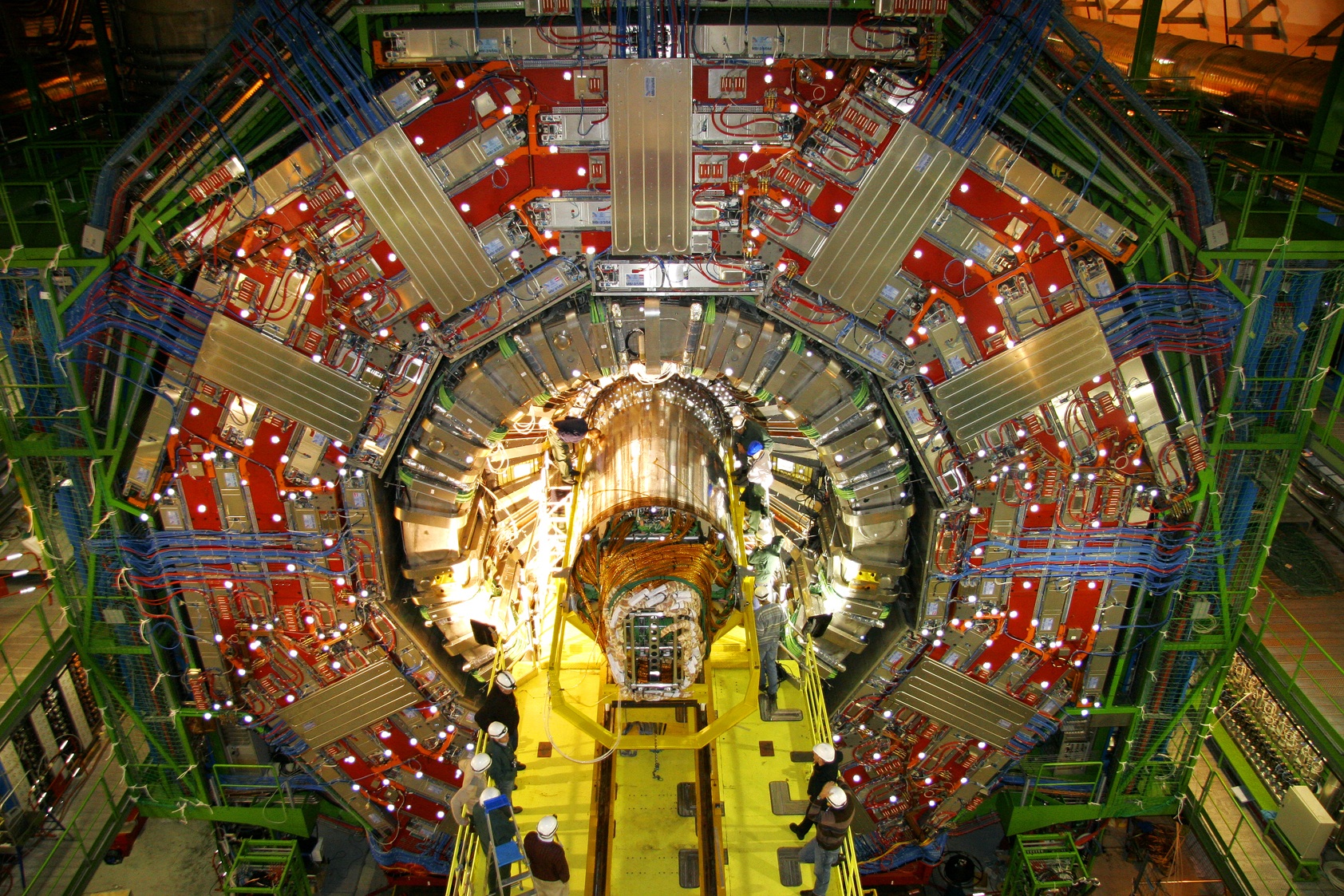

Most commonly, when doing Taylor expansions to higher and higher orders, an expression that started out small can become very large. One particular example comes from particle physics, where giant detectors measure new particles created from high-energy collision to great precision. To see if these measurements are compatible with the current best theory of nature, precise predictions have to be made too. In practice, these predictions are computed through a Taylor expansion of the Standard Model, like was done for the sin function above.

Feynman was able to describe the component of these expansions in terms of graphs, for which he got a Nobel prize. The graphs with the fewest number of edges contribute the most to the final predicition, and more complicated the graph contribute less. Below is an example of such a graph:

that contributes to the fifth-order correction of a gluon propagating (‘flying’) in space. One could read this graph as a gluon coming in from the left, splitting into other gluons that split and fuse again and finally resulting in a single gluon coming out.

This single graph represents an expression of more than 12 million terms and there are thousands of these graphs! In order to get a number out of these terms about 1 TB of memory is needed.

At this stage, if the symbolic manipulation toolkit is not very careful about managing memory, the computation will crash your computer due to running out of memory or it will start swapping memory to disk, which is extremely slow.

In the early 90s, the symbolic manipulation toolkit FORM was released that treated a mathematical expression as a sorted list of terms and most operations could only affect a single term. This way, operations over terms could be parallelized, and terms that were processed would be written to disk. Terms that can be merged (such as \(x+x \rightarrow 2x\)) are then merged using a merge sort. In such a setup, memory is no longer the limiting factor but disk space. Sadly FORM risks being unmaintained.

“Crucial Computer Program for Particle Physics at Risk of Obsolescence” - Quanta Magazine

This spurred the creation of Symbolica, which will be funded by multiple research institutes and by companies.

Symbolica

Symbolica takes the idea of having a mode that operates term-by-term from FORM and modernizes just about everything else. Symbolica will foremost be a library that can be used from many common languages such as Python and C++. For example, here is a Python code that uses the symbolica package:

from symbolica import Expression, Function

x, y, z = Expression("x"), Expression("y"), Expression("z")

f = Function("f")

e = x**2 + f(x,y)*y + x*z/y

print('e =', e)

for i, t in enumerate(e):

print('term {}={}'.format(i, t))that creates a new expression e that reads \(x^2 + f(x,y)y + x z / y\). Imagine that e does not fit in memory, then the iteration over its terms using the enumerate will read in a term from disk and will write it to disk again at the end of the block, freeing the memory.

In order to make sure that operations on terms do not run out of memory since the output is too large, the operations need to yield every term one after the other. In other words, the operations need to be generators/iterators. Take the expansion operator for example:

from symbolica import Expression

x = Expression("x")

e = (x + 1)^100

e.expand() # a generatorgenerates 101 terms, but they should be yielded one by one and all terms can be collected as:

# new_exp = list(e.expand()) # may not fit in memory

new_exp = Expression.from_iter(e.expand()) # will automatically write to disk if neededNext, let’s look at an example of pattern matching.

An example of pattern matching

Pattern matching lies at the heart of a symbolic manipulation toolkit. For convenience, let us denote patterns with a useful scripting language. Any identifier is a literal if it is not followed by a ?, otherwise it is a wildcard. For example x? can match any variable, and ?a can match any number of arguments of a function. Then we can write:

e = f(5)

e.id("f(x?)", "f(x? + 1)")The pattern f(x?) matches a function f with one argument that could be anything and whose match is called x?. The second argument is the expression that the matched part should be substitued with. In this case our input is f(5) and so the pattern f(x?) matches f(5) and it sets x? = 5. Then on the right-hand side it replaces the match into the substituted expression, yielding f(5 + 1). This is then normalised to f(6).

And example where pattern matching is convenient is Laporta’s algorithm. Coming back to the Feynman graphs shown before, there are relations that can be derived to express graphs as sums as other / simpler graphs. Imagine a graph that has an (integer) \(n_i > 0\) associated at each of its edge \(i\):

and writing the \(n_i\) as arguments of a function \(I\), we have:

\[ \begin{align*} I(n_1,n_2,n_3,n_4,n_5) =& \frac{1}{D-n_1-n_4- 2 n_5} \biggl[\\ +& n_1 I(n_1 + 1, n_2, n_3, n_4, n_5 - 1)\\ +& n_1 I(n_1 + 1, n_2 - 1, n_3, n_4, n_5)\\ +& n_4 I(n_1, n_2, n_3, n_4+1, n_5 - 1)\\ +& n_4 I(n_1, n_2, n_3 - 1, n_4 + 1, n_5) \biggr] \end{align*} \]

A careful inspection shows that for any graph, repeated application if this rule will make sure that either \(n_2\), \(n_3\) or \(n_5\) will end up as 0, which is considered a simpler graph. With a structure like this, one may be tempted to use Symbolica for the rational coefficients and store the indices, as sketched in the following Python script:

# start with one graph with n_i = 2,1,1,1,2 and a coefficient of 1

graphs = [([2,1,1,1,2], Expression.parse("1"))]

while len(graphs) > 0:

new_graphs = []

for graph, coeff in graphs:

# apply rewrite rule

prefactor = Expression.parse("1/(D-{n1}-{n4}-2*{n5})"

.format(n1 = c[0], n4 = c[3], n5 = c[4]))

out_1 = ([c[0] + 1,c[1],c[2],c[3],c[4] - 1], c * c[0] * prefactor)

if all(c > 0 for c in out_1[0]):

new_graphs.append(out_1)

else:

print("Reduced: {}", out_1)

# other 3 terms are suppressed

new_graphs = graphThis setup has the disadvantage that many graphs with the same indices \(n_i\) are created during the rule application and they are not summed up, therefore doing extra work and taking up more space. Instead, the entire algorithm can be applied inside Symbolica:

from symbolica import Expression

I = Function("I")

e = I(2,1,1,1,2)

e.id(repeat, "I(n1?{>0},n2?{>0},n3?{>0},n4?{>0},n5?{>0})", " 1/(D-n1-n4-2*n5)*(n1 * I(n_1 + 1, n_2, n_3, n_4, n_5 - 1) + ... )")where the id statment is repeated until it matches no longer and {>0} is a condition on a pattern that only matches when the number is more than 0.

Make sure to read the blog post about how many algorithms can be cast into repeated pattern matches on expressions!

I hope you liked the quick sample of Symbolica. Much more will follow in future blogs.